Identity Crisis: Memorization and Generalization under Extreme Overparameterization - ICLR 2020

What is inductive or learning bias?

In a machine learning model, inductive bias refers to the set of assumptions that shape its predictions on inputs it has not seen during training.
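As a toy illustration (not taken from the paper), take a single training pair for the identity task. Two candidate functions fit that pair equally well: the identity, and a constant function that always returns the memorized target. The training data cannot tell them apart, so the one a learner ends up with is decided by its inductive bias. The function names `identity` and `constant` are hypothetical.

```python
import numpy as np

# One training pair for the identity task: the target equals the input.
x_train = np.array([1.0, 2.0, 3.0])
y_train = x_train.copy()


def identity(x):
    """Generalizing hypothesis: return the input unchanged."""
    return x


def constant(x):
    """Memorizing hypothesis: ignore the input and return the stored target."""
    return y_train.copy()


# Both hypotheses reach zero squared training error on the single example.
train_errors = {f.__name__: float(np.sum((f(x_train) - y_train) ** 2))
                for f in (identity, constant)}

# On an unseen input they disagree, and the data alone cannot break the tie.
x_test = np.array([-1.0, 0.5, 4.0])
test_outputs = {f.__name__: f(x_test) for f in (identity, constant)}
print(train_errors)
print(test_outputs)
```

Any learner that reaches zero training error here has implicitly chosen among such interpolating functions, and that choice is its inductive bias.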

How can it be studied?

This paper presents a framework for analyzing inductive bias in more detail, starting from the observation that modern deep neural networks are heavily overparameterized.

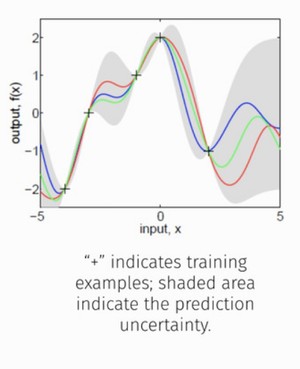

Predictions in the vicinity of the training samples are regularized by continuity or smoothness constraints, while predictions further away from the training samples determine generalization performance. How the model behaves in each regime reflects the learning bias of both the model and the learning algorithm.

To study this behavior, the authors combine two simplifications:

- Learning with one example
  - Extreme overparameterization
  - Easy to determine the distance of any input from the training example

- Learning the identity map \(f(x)=x\)
  - Requires all input features to be transmitted to the output
  - Affords detailed visualization and analysis of model behavior
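Because the target is the input itself, it is easy to check what a model trained on one example has actually learned. Compare each test output with its own input (identity) and with the training example (memorization). The sketch below is a minimal version, assuming inputs are arrays that can be flattened and using cosine similarity as the comparison. That similarity measure is an assumption, not necessarily the one used in the paper. The helper `identity_or_memorization` and the two stand-in "trained models" are hypothetical.

```python
import numpy as np


def cosine(a, b):
    """Cosine similarity between two flattened arrays."""
    a, b = np.ravel(a), np.ravel(b)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def identity_or_memorization(model, x_train, test_inputs):
    """Average similarity of model outputs to their own inputs vs. to the training example."""
    to_input = np.mean([cosine(model(x), x) for x in test_inputs])
    to_train = np.mean([cosine(model(x), x_train) for x in test_inputs])
    label = "identity" if to_input > to_train else "memorization"
    return float(to_input), float(to_train), label


rng = np.random.default_rng(1)
x_train = rng.normal(size=(8, 8))            # stand-in for a single training image
test_inputs = [rng.normal(size=(8, 8)) for _ in range(20)]


def learned_identity(x):
    """Toy stand-in for a model that learned f(x) = x."""
    return x


def learned_constant(x):
    """Toy stand-in for a model that memorized the training example."""
    return x_train


print(identity_or_memorization(learned_identity, x_train, test_inputs))
print(identity_or_memorization(learned_constant, x_train, test_inputs))
```

With a real trained network, the two similarity scores would typically fall between these extremes. Plotting the outputs next to their inputs gives the kind of visual check that the identity task makes possible.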

Find the paper here! ICLR2020.